The Agile Database Techniques Stack: The Dev Side of DataOps

- Agile database techniques stack

- Why is this a stack?

- Why adopt the agile database techniques stack?

- What is the best way to adopt the agile database techniques stack?

- Can you adopt individual techniques?

- Agile database techniques and DataOps

1. The Agile Database Techniques Stack

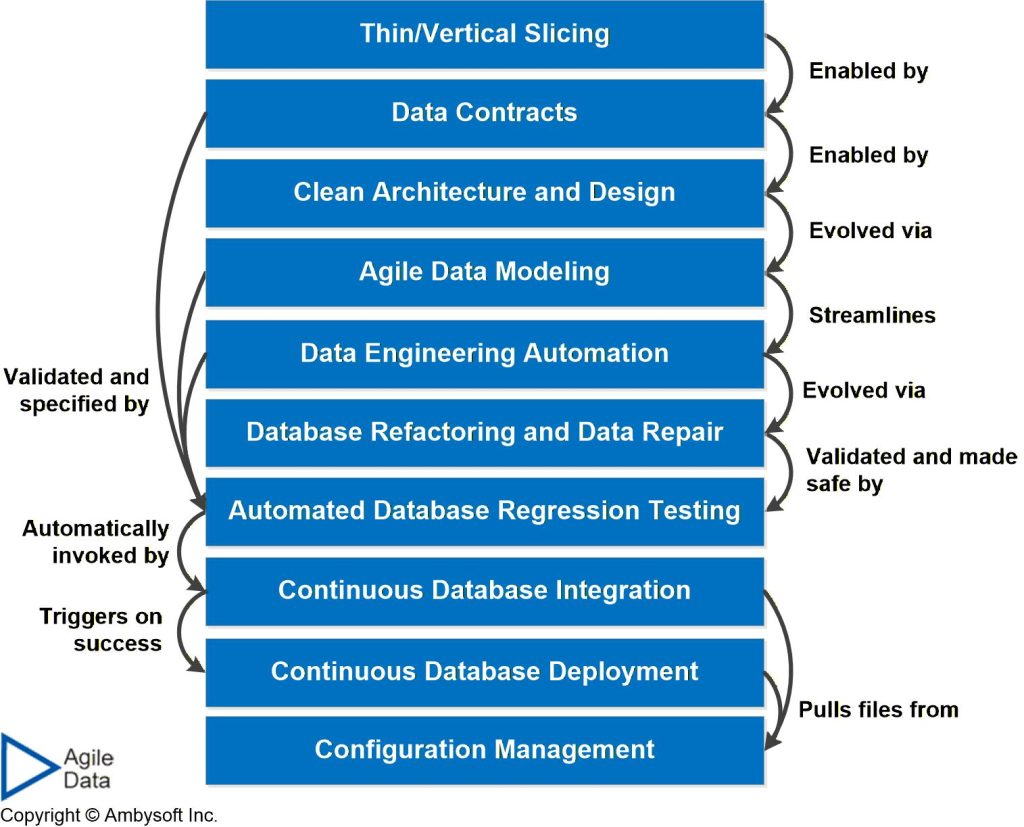

Figure 1 gives an overview of the agile database techniques stack.

Figure 1. The Agile Database Techniques Stack.

The techniques in the stack are:

- Thin slicing. A fundamental agile development technique is to thinly slice functionality into small, consumable pieces that may be potentially deployed into production quickly. These vertical slices are completely implemented – the analysis, design, programming, and testing are complete – and offer real business value to stakeholders. Thin slicing is completely applicable, and highly desirable, in data development.

- Data contracts. A data contract is a formalized agreement or specification that outlines the structure, format, and semantics of the data being exchanged. Data contracts establish clear expectations and responsibilities for data quality, indicating how data should be produced, transmitted, and used. Data contracts are applied programmatically to application programming interfaces (APIs) or other encapsulation strategies to ensure the quality of data being passed through them.

- Clean data architecture and clean database design. A clean data architecture strategy enables you to develop and evolve your data assets at a pace that safely and effectively supports your organization – in short, to be agile. Similarly, a clean database design enables you to evolve specific data assets in an agile manner.

- Agile data modeling. With an evolutionary approach to data modeling you model the data aspects of a system iteratively and incrementally. With an agile data modeling approach you do so in a highly collaborative and streamlined manner.

- Database engineering automation. This refers to two kinds of automation. The first is automated support for data engineering tasks through better tools used by data engineers, such as improved data profiling tools or data modeling tools. The second is outright automation of significant data engineering activities, such as a tool that profiles an existing data source, maps it to a Data Vault 2 (DV2) raw vault schema, and generates the extract-load-transform (ELT) functionality required.

- Database refactoring and data repair. A database refactoring is a small change to your database schema which improves its design without changing its semantics (i.e. you neither add anything nor break anything). The process of database refactoring is the evolutionary improvement of your database schema so as to better support the new needs of your customers. A data repair is a small fix to data that addresses a data quality problem. The process of data repair is the evolutionary improvement of your data quality.

- Automated database regression testing. You should ensure that your database schema actually meets the requirements for it, and the best way to do that is via testing. With a test-driven development (TDD) approach, you write a unit test before you write production database schema code, the end result being that you have an automated regression test suite for your database schema that also helps to ensure data quality.

- Continuous database integration (CDI). Continuous integration (CI) is the automatic invocation of the build process of a system. As the name implies, continuous database integration (CDI) is the database version of CI.

- Continuous database deployment (CDD). Continuous database deployment (CDD) is the database version of continuous deployment (CD). In CD, when your continuous integration (CI) run succeeds, your changes are automatically promoted to the next environment. CDD is the automated deployment of successfully built database assets from one environment/sandbox to the next, accounting for the implications of persistent data.

- Configuration management. Your data models, database tests, test data, and so on are important artifacts that should be under configuration management, just like any other artifact.
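To make the database refactoring and automated regression testing items above a little more concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. The `customer` table, the `fname` and `first_name` columns, and all function names are hypothetical and chosen only for illustration. It assumes a refactoring in which a better-named column is introduced alongside the old one, so existing readers keep working, and a check written test-first that only passes once the refactoring has been applied.

```python
import sqlite3


def create_schema(conn):
    """Build the starting schema; the terse column name `fname` is what we want to improve."""
    conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, fname TEXT NOT NULL)")
    conn.executemany("INSERT INTO customer (fname) VALUES (?)", [("Ada",), ("Grace",)])


def refactor_introduce_first_name(conn):
    """Add `first_name` alongside `fname` and copy the data across.

    The old column stays in place so existing readers keep working;
    dropping it would be a later, separate step.
    """
    conn.execute("ALTER TABLE customer ADD COLUMN first_name TEXT")
    conn.execute("UPDATE customer SET first_name = fname")


def check_first_names(conn):
    """Regression check: the schema exposes `first_name` and the data is unchanged."""
    rows = conn.execute("SELECT first_name FROM customer ORDER BY id").fetchall()
    return [r[0] for r in rows] == ["Ada", "Grace"]


def run_demo():
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    # Test-first: before the refactoring the check cannot pass,
    # because the new column does not exist yet.
    try:
        before = check_first_names(conn)
    except sqlite3.OperationalError:
        before = False
    refactor_introduce_first_name(conn)
    after = check_first_names(conn)
    # The old column still returns the same values, so nothing was broken.
    legacy = [r[0] for r in conn.execute("SELECT fname FROM customer ORDER BY id")]
    conn.close()
    return before, after, legacy


if __name__ == "__main__":
    print(run_demo())
```

Calling `run_demo()` returns `(False, True, ['Ada', 'Grace'])`: the check fails before the change, passes after it, and the legacy column is untouched. In a real project the check would live in your test suite and run on every build.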

2. Why is This a Stack?

We call it a stack because each technique relies on you being able to perform the ones below it. For example, Figure 2 shows that continuous database integration (CDI) requires you to have a configuration management strategy and will automatically invoke your CDD infrastructure if it exists.

Figure 2. How the techniques rely on each other.
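The dependency described above for CDI can be sketched in a few lines of Python. This is an illustrative model only, not a real CI tool: the `Pipeline` class, its fields, and the script name used in the usage example are all hypothetical. It assumes that assets under configuration management are represented as a list of change-script names, that CDI is a build-and-test step, and that CDD is an optional hook invoked only when that step succeeds.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Pipeline:
    """Hypothetical pipeline: versioned change scripts -> CDI -> optional CDD."""

    # Stands in for the assets held under configuration management.
    versioned_scripts: List[str]
    # The CDI build and regression-test step; returns True on success.
    run_tests: Callable[[List[str]], bool]
    # The CDD hook; None means no CDD infrastructure exists yet.
    deploy: Optional[Callable[[List[str]], None]] = None
    log: List[str] = field(default_factory=list)

    def integrate(self) -> bool:
        # CDI relies on configuration management: nothing versioned, nothing to build.
        if not self.versioned_scripts:
            raise ValueError("CDI needs assets under configuration management")
        self.log.append("build")
        ok = self.run_tests(self.versioned_scripts)
        self.log.append("tests passed" if ok else "tests failed")
        # CDD is invoked automatically, but only if it exists and the build succeeded.
        if ok and self.deploy is not None:
            self.deploy(self.versioned_scripts)
            self.log.append("deployed")
        return ok
```

For example, `Pipeline(["001_create_customer.sql"], run_tests=lambda scripts: True).integrate()` builds and tests without deploying, because no `deploy` hook was supplied. Passing a hook adds the deployment step without changing the integration logic.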

3. Why Adopt the Agile Database Techniques Stack?

Any given technique has advantages and disadvantages, including the ones described in this article. Any given practice works well in some situations, and may even be the “best” that you can do in those situations, but doesn’t work well in others. Practices are contextual and should be presented as such. Presenting something as a “best practice” is deceptive, in my opinion. To prove my point, the advantages and disadvantages of each agile database technique are summarized in the following table.

| Technique | Advantages | Disadvantages |
| --- | --- | --- |
| Thin Slicing | | |
| Data Contracts | | |
| Clean Data Architecture | | |
| Clean Database Design | | |
| Agile Data Modeling | | |
| Automated Database Engineering | | |
| Database Refactoring | | |
| Data Repair | | |
| Automated Database Regression Testing | | |
| Continuous Database Integration (CDI) | | |
| Continuous Database Deployment (CDD) | | |
| Database Configuration Management | | |

4. What is the Best Way to Adopt the Agile Database Techniques Stack?

There isn’t a single, “best” way. It depends on the context of the situation that you face. Here are some potential strategies to consider:

- Small, incremental improvements. Treat the techniques stack like an improvement target, making small changes to work towards it. You’re very likely already doing data architecture, database design, data modeling, some database testing, and configuration management of some assets, and you may even be improving the implementation of some of your legacy data sources. So start evolving your current way of working (WoW) to leverage ideas captured in the various techniques of the stack. I highly suggest that anyone interested in how to improve effectively via small steps take a look at PMI’s Guided Continuous Improvement (GCI).

- Take a top-down approach. When you adopt a technique, you’ll find that you need to adopt at least some aspects of the technique that it immediately relies on. This continues recursively until you reach the bottom of the stack. In some ways you’ll be adopting “vertical slices” of the overall techniques stack. What I mean by this is that you’ll adopt just enough of each technique to get some value, then adopt some more of it, and so on.

- Take a bottom-up approach. Although this makes sense from a technical point of view, and is certainly easier, it tends to be difficult from a management point of view. The techniques towards the top of the stack tend to have the best short-term payback whereas the techniques towards the bottom have a longer-term payback. Starting bottom-up, you’re effectively starting with the hardest strategies to justify (at least in organizations struggling to operate with a value-driven mindset rather than a cost-driven one).

- Take a middle-out approach. Some people choose to start with the more technically interesting techniques, particularly database refactoring and automated database regression testing, often because those are most likely to be new to them and to their organization. Other people will focus on improving what they’re already doing, such as data modeling and database design, by adopting more effective agile approaches. Either way, you will still need to swiftly adopt techniques lower on the stack.

- Adopt everything at once. This can be chaotic because in effect it’s a large change for your data group. As pointed out above, the top-down strategy tends to quickly evolve into this one anyway, although perhaps via “vertical slices” of the overall technique stack.

The list above is ordered by my personal preference, which is driven by what I have found to work in practice. But it really does depend on your situation; no one approach works in all situations. There are no best practices.

5. Can You Adopt Individual Techniques?

Yes.

Each technique offers value on its own. However, because they build on each other, as you saw in Figure 2, and in the previous section, they are more effective when adopted together.

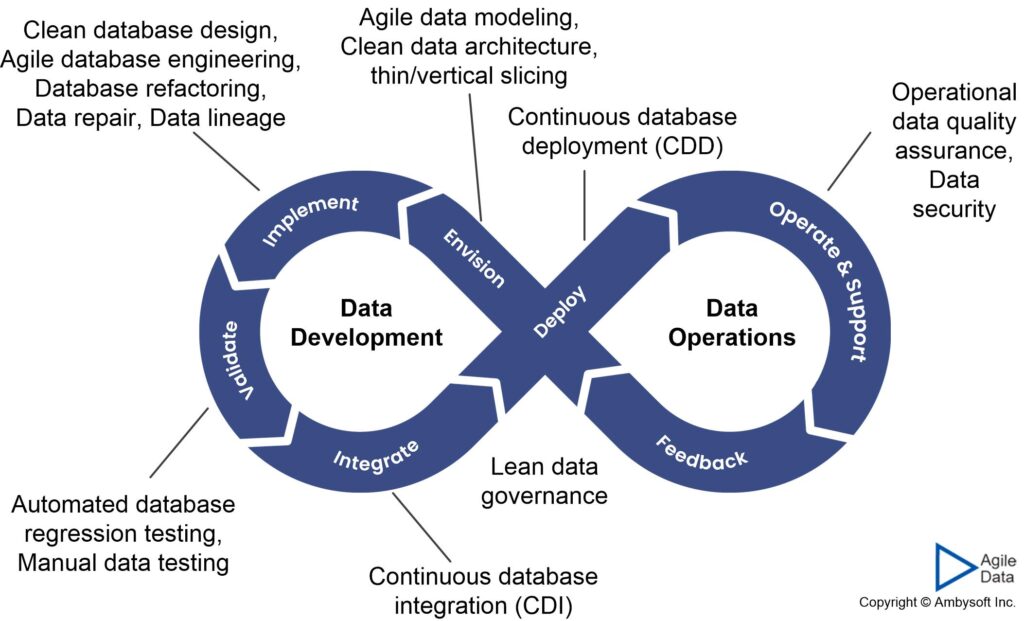

6. Agile Database Techniques Stack and DataOps

Figure 3 depicts the DataOps lifecycle, the Möbius loop, with a collection of data and database techniques mapped to its activities. The figure includes several techniques that are not called out by the agile database techniques stack, such as data lineage and data security. This is because the agile database techniques stack is a subset of the agile data techniques available to you, capturing the ones most critical to the development side of the loop.

Figure 3. Mapping data techniques to the DataOps lifecycle.

7. Related Resources

- Clean Database Design

- Data Debt: Understanding Enterprise Data Quality Problems

- Guided Continuous Improvement (GCI)

- Introduction to DataOps: Bringing Databases Into DevOps

- Questioning the Concept of “Best Practices”: Practices are Contextual, Never “Best”