Data Repair: Fix Production Data at the Source

The idea of data repair is simple: You fix a data quality problem at its source. When I say source, I mean the actual data source, typically a production database. By fixing the problem at the source, we can reduce data debt across our organization as we no longer need to address the data quality problem every time we want to work with the data.

This article works through the following topics:

- Example data repairs

- Why data repair?

- Risks associated with data repair

- Potential data repairs

- Implementing a data repair

- Data repair in context

- Data repair and DataOps

- Related resources

1. Example Data Repairs

Figure 1 depicts the before and after picture of a PhoneNumber data field. In the before version phone numbers are stored as strings, sometimes with formatting and sometimes without. In the after version the formatting information, such as spaces and parenthesis are removed and all numbers are captured using a common format.

Figure 1. Example data repair: Introduce common format to a phone number data field.

| Before | After |

| (416) 967-1111

905.555.1234 647 345-6789 4165551212 +1 905 987 6543 |

4169671111

9055551234 6473456789 4165551212 9059876543 |

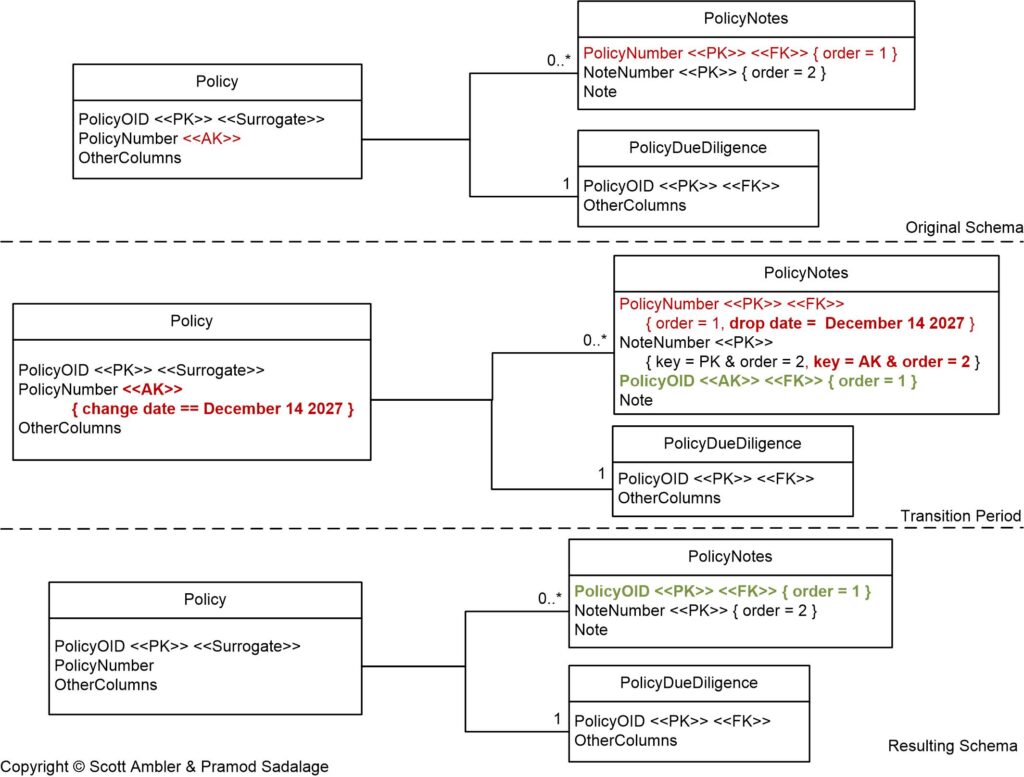

In Figure 2 we see a more complex example of data repair. In this case we’ve decided to consolidate the key strategy for Policy entities. In the original schema we’re using PolicyOID as the primary key of Policy, our preferred strategy. However, PolicyNotes uses PolicyNumber, a unique business identifier as the key. We’ve decided to replace this key with PolicyOID to increase consistency across our design. We’ll explore the need for a deprecation period later in this article when we discuss how to implement data repairs.

Figure 2. Example data repair: Consolidate key strategy (click to enlarge).

2. Why Data Repair?

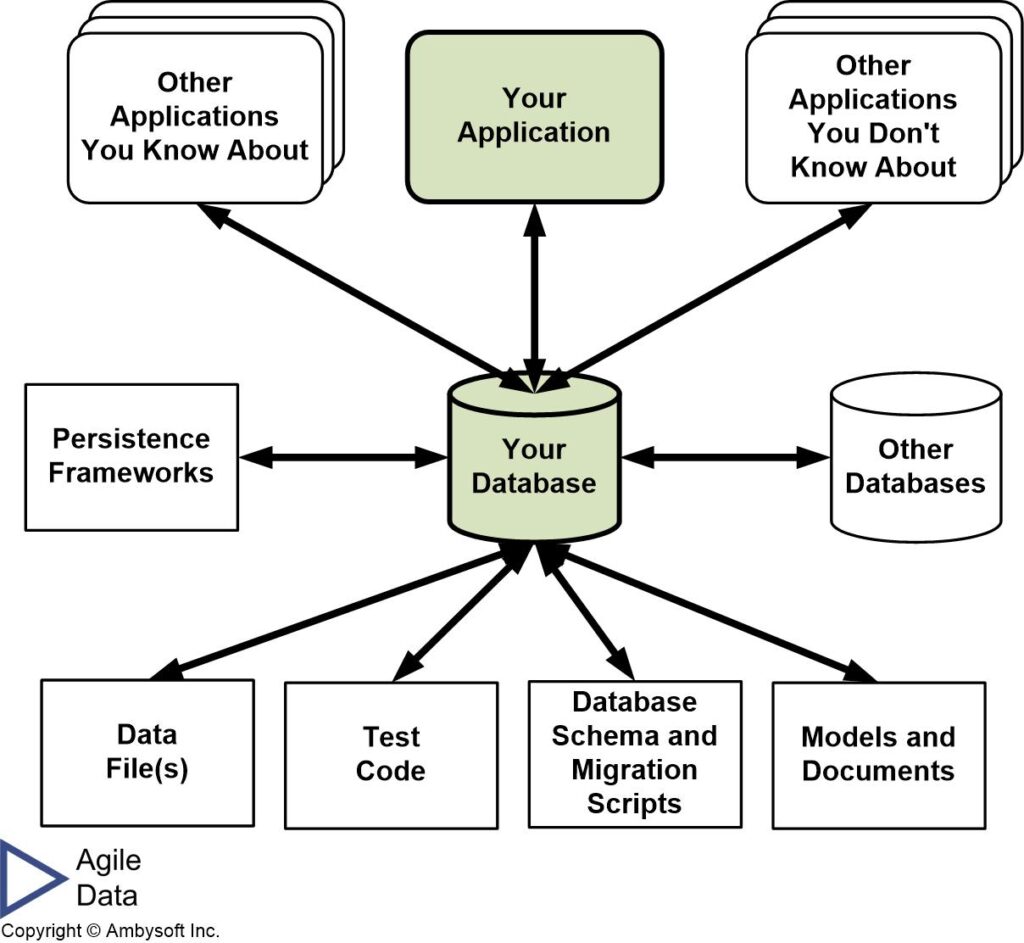

The primary benefit of data repair is that it fixes data quality problem once at the source. Consider the implications of Figure 3. When there is a data quality problem in the database any client system that works with that data will need to either address the problem or suffer the consequences. In short, the effort required to address the problem is implemented in multiple places. This is clearly wasteful and increases overall technical debt within your organization. It may also be the case that the problem isn’t addressed consistently which will likely compound the data quality problem.

Figure 3. Systems are coupled to your database (click to enlarge).

3. The Risks Associated with Data Repair

One word: Coupling.

The challenge is that databases are highly coupled to the client systems that access that data. This is depicted in Figure 3. Due to this coupling, any changes to either the database schema or to the semantics of the values stored in the database may cause problems within the client systems. Database refactorings can impact the database schema, data repairs, the topic of this article, can impact the data semantics.

You are able to make changes to your application/system code that reflect any changes that you make to the data in the database. That’s straightforward. In many environments there could be dozens, or even hundreds, of other systems that also work with the database. You’re likely not allowed, or even capable, of updating all of the code in those other systems. And this assumes you know about every system that works with the database, a potentially false assumption.

4. Potential Data Repairs

Table 1 summarizes a collection of common data repair types. Each type data repair is described, common tradeoffs (good and bad) of applying it, and an example of that data repair type provided.

Table 1. Potential data repairs.

| Data Repair Type | Tradeoffs | Example Implementation (click to enlarge) |

| Add Lookup Table. Create a lookup table for an existing column.

Often applied in combination with Apply Standard Codes. |

|

|

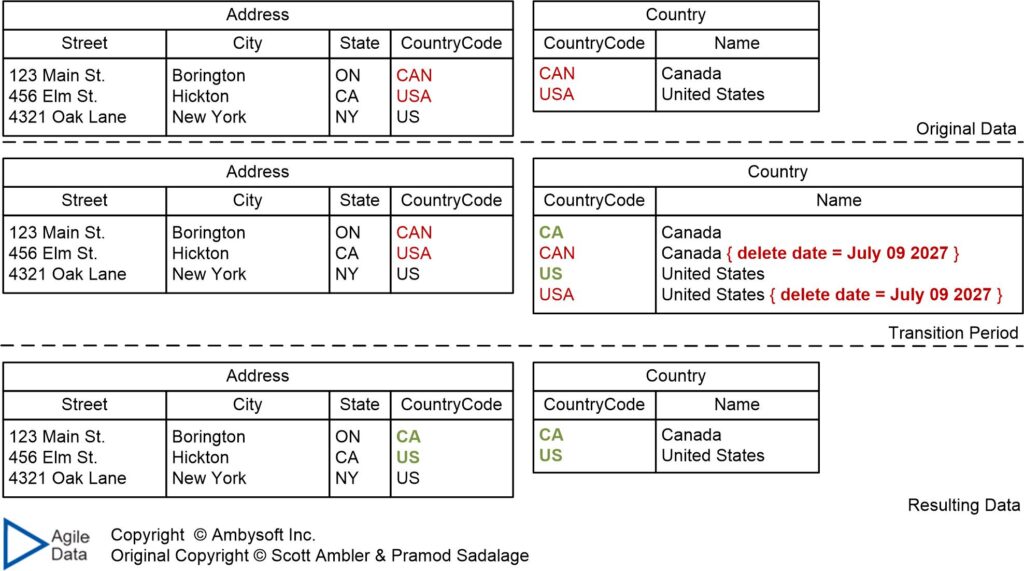

| Apply Standard Codes. Apply a standard set of code values to a single column to ensure that it conforms to the values of similar columns stored elsewhere in the database.

Often applied in combination with Add Lookup Table. |

|

|

| Apply Standard Type. Ensure that the data type of a column is consistent with the data type of other similar columns within the database. |

|

|

| Consolidate Key Strategy. Choose a single key strategy for an entity and apply it consistently throughout your database. |

|

|

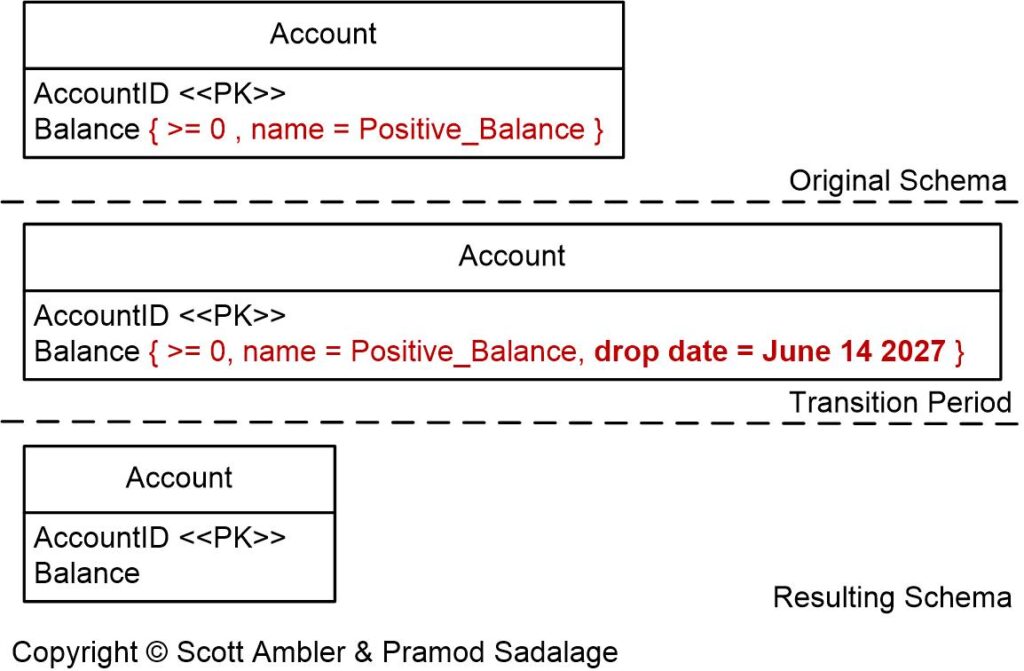

| Drop Column Constraint. Remove a column constraint from an existing table. |

|

|

| Drop Default Value. Remove the default value that is provided by a database from an existing table column. |

|

|

| Drop Non-Nullable Constraint. Change an existing non-nullable column such that it accepts null values. |

|

|

| Introduce Column Constraint. Introduce a column constraint in an existing table. |

|

|

| Introduce Common Format. Apply a consistent format to all the data values in an existing table column. |

|

|

| Introduce Default Value. Let the database provide a default value for an existing table column. |

|

|

| Make Column Non-Nullable. Change an existing column such that it does not accept any null values. |

|

|

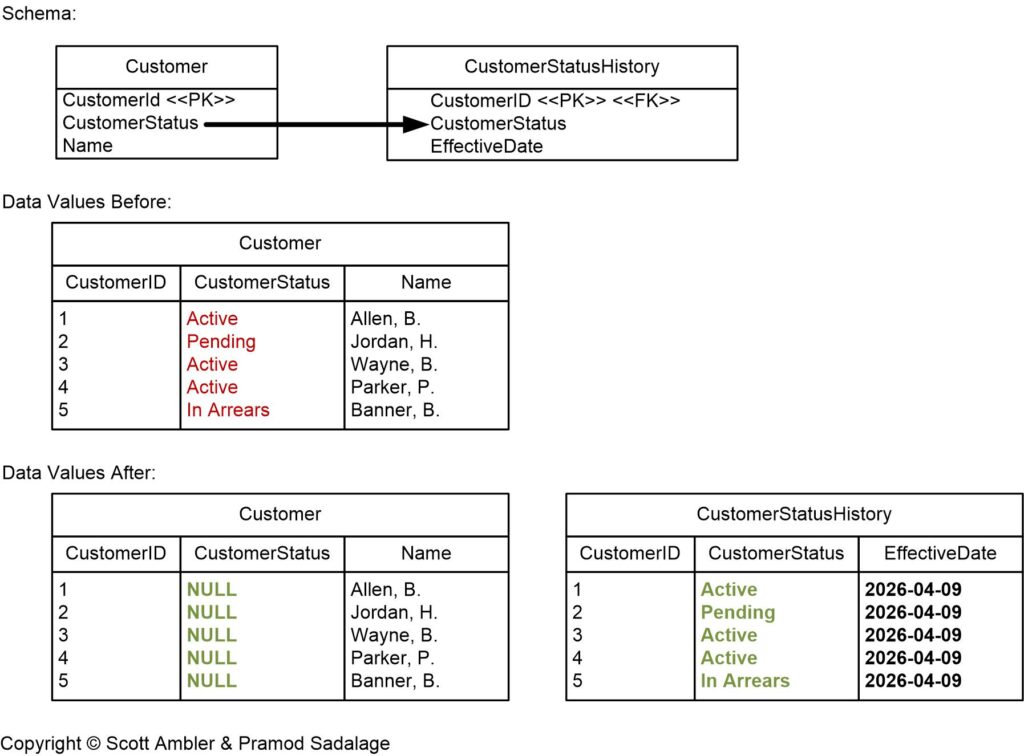

| Move Data. Move the data contained within a table, either all or a subset of its columns, to another existing table. |

|

|

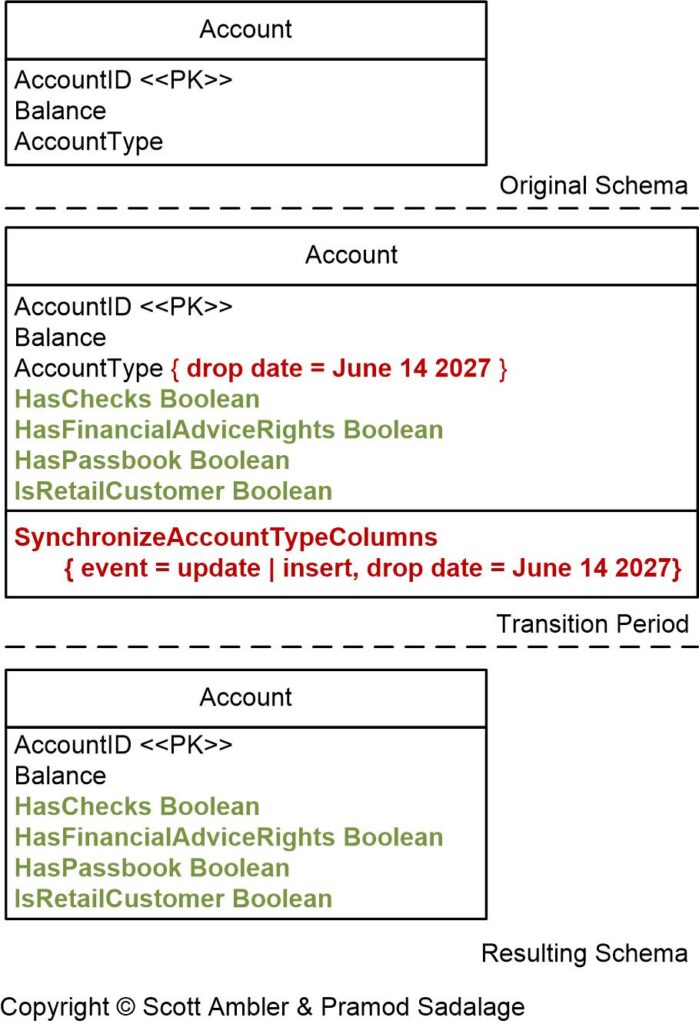

| Replace Type Code. Replace a code column with individual property flags, usually implemented as Boolean columns, within the same table column. |

|

|

5. Implementing a Data Repair

The Introduce Common Format data repair of Figure 1 is straightforward, at least on the surface (potential risks with data repair are discussed earlier). However, as you saw with Consolidate Key Strategy of Figure 2 this isn’t always the case. Very often you need a period of time where you have the original “broken” portion of your database schema running in parallel to the fixed version. This is to give the owners of the client systems that work with the data sufficient time to update, validate, and deploy their system to work with the fixed data. During this transition period one of two things must happen:

- The fix is announced by not yet implemented. Some data repairs, such as Drop Column Constraint, require a period of time where the teams responsible for the client systems are given time to update and test their systems to support the repaired data. In this case they’re given a date in the future where the data repair will be deployed. They must deploy their changes on or before this date. If they deploy their updated system before the date that the data repair will be deployed then their code must work with both the original approach, in this case a column constraint on Account.Balance, and the new approach (no column constraint). If they deploy their updates at the same time that the data repair is deployed then the two teams will need to coordinate to ensure this works out.

- The fix is implemented and run in parallel with the original version. Some data repairs, such as Consolidate Key Strategy, can be implemented immediately as long as the original data is supported in parallel. In this case the existing key, PolicyNotes.PolicyNumber, is retained even though the desired key, PolicyNotes.PolicyOID, has been implemented. The advantage of the database supporting both versions in parallel is that the client system teams are provided the flexibility to deploy their updates when it makes the best sense for them. If all client system teams successfully deploy their updates before the transition period ends then it’s possible to move the date of finalizing the repair forward.

Figure 4. Some data repairs require a transition period where the original and new schemas run in parallel (click to enlarge).

5.1 Data Repair and Database Refactoring

In the past these data repairs were captured as Data Quality Refactorings. However, they were refactored out of the database refactoring practice but this was a misnomer because some repairs could potentially improve the semantics of the data being repaired. By teasing the DQ refactorings out into it’s own practice, data repair, we achieve the following benefits:

- The importance of data repair is made clear. Repairing data quality problems at the source should be, and is, a named practice.

- Database refactoring becomes “pure”. A refactoring, of your database or otherwise, is a change that improves the design without changing its semantics. Many data repairs, by their very definition, change the semantics. The remaining 50+ database refactorings do not change the semantics.

6. Data Repair in Context

The following table summarizes the trade-offs associated with data repair and provides advice for when (not) to adopt it.

| Advantages |

|

| Disadvantages |

|

| When to Adopt This Practice | When you are responsible for (i.e. “own”) a data source and there are problematic data fields that are used by multiple clients. Like database refactoring, choosing to fix an existing data source should be your preferred strategy to address data quality problems. |

Figure 5 depicts the data quality technique comparison factor ratings for the data repair technique. The reasonings for each factor score are:

- Timeliness. Data repair tends to be a mostly reactive activity – you run into a data quality problem, something that may have been there for years, and you decide to fix it.

- DataOps automation. Although there is tooling to help you with data repair, this tooling is typically used at the time that the data repair is performed. However, in situations where there are client systems that are still breaking the data that you’re hoping to fix, you may decide to run the repair logic on a regular basis until the point in time that the client system is fixed.

- Effect on source. Data repair directly fixes the source data.

- Benefit realization. You see immediate benefit from fixing the source data.

- Required skills. Data repair is something that can be taught to data professionals relatively easily. Some coding skill is often required for more complicated repairs. An understanding of the data, in particular how it is used, is also required to ensure the repair is made correctly.

Figure 5. Rating the data repair practice by technique comparison factors (click to enlarge).

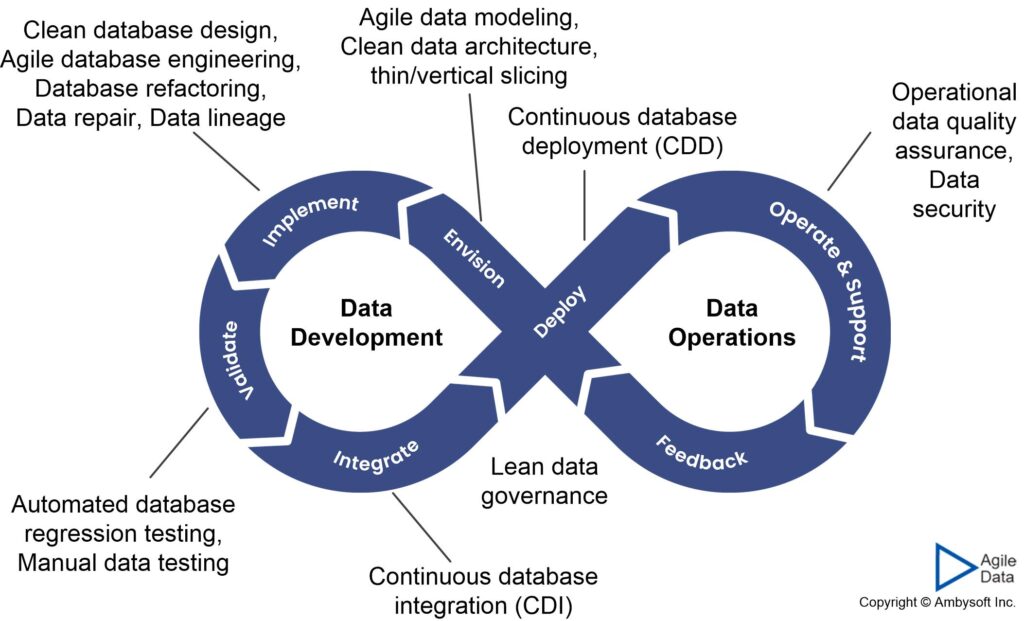

7. Data Repair and DataOps

Data repair is an important implementation technique from the point of view of DataOps. The higher the quality of your data, the easier it is to understand and work with, thus enabling more streamlined DataOps.

Figure 6. The DataOps lifecycle (click to enlarge).

8. Related Resources

- The Agile Database Techniques Stack

- Data Debt: Understanding Enterprise Data Quality Problems

- Data Quality in an Agile World

- Database Refactoring

- Introduction to DataOps